The experiment was more than a parlor trick. Every millimeter-accurate coordinate that makes a rhythm game feel responsive also doubles as a biometric fingerprint. That same stream can train warehouse robots or reveal a tremor that hints at Parkinson’s disease—often without the player’s knowledge. The clash between immersive design, industrial ambition, and privacy law now shapes a global policy fight.

What VR Headsets Actually Capture

Many consumer headsets constantly log six degrees of freedom—position and rotation along three axes—for the visor and each controller on nearly every rendered frame. Because the sampling rate often tops 70 times per second, the output is a continuous map of fine-grained human motion.
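To give a sense of scale, the sketch below models one logged frame and counts how many motion values a session produces. The field layout (Euler angles, one timestamp per frame) and the 72 Hz rate are illustrative assumptions, not any vendor's actual log format; many runtimes report orientation as quaternions instead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose:
    """Six degrees of freedom: position in meters, rotation in degrees (assumed layout)."""
    x: float
    y: float
    z: float
    pitch: float
    yaw: float
    roll: float


@dataclass(frozen=True)
class TrackingFrame:
    """One rendered frame: a timestamp plus poses for the visor and both controllers."""
    t_seconds: float
    head: Pose
    left_hand: Pose
    right_hand: Pose


def values_per_frame() -> int:
    # 3 tracked devices x 6 degrees of freedom each
    return 3 * 6


def samples_per_session(rate_hz: float, minutes: float) -> int:
    """Number of scalar motion values logged over one session."""
    frames = int(rate_hz * minutes * 60)
    return frames * values_per_frame()


# A hypothetical 30-minute session at an assumed 72 frames per second.
print(samples_per_session(72, 30))  # 2332800
```

Under those assumptions, half an hour of play yields more than two million individual measurements of how one person moves.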

Newer models pile on eye-tracking for foveated rendering, facial electromyography for expressive avatars, photoplethysmography for heart rate, and spatial scans of every wall and couch. A 2025 study in Virtual Reality showed that gaze vectors alone can single out users mingling in a social-VR lounge.

Because those coordinates arrive dozens of times per second, privacy scholars say the streams qualify as "indirect identifiers"—data that trace back to a person even after names or IP addresses are stripped away. “Natural body movements are so important that the medium literally can’t run if you turn off movement tracking,” Stanford professor Jeremy Bailenson told Stanford News in May 2025.

What began as a technical necessity for immersion has quietly become a rich vein of biometric data, one that regulators are only starting to scrutinize.


Motion Data as a Biometric Fingerprint

The Berkeley Beat Saber paper was hardly the first flare. In 2020, Stanford’s Virtual Human Interaction Lab reported in Scientific Reports that a model primed with less than five minutes of each person's earlier tracking data re-identified 95 percent of 511 participants, who had done nothing more than watch a 360-degree video.

More recent attacks fuse multiple sensors. A November 2024 pre-print by Aziz and Komogortsev combined eye-gaze and hand trajectories to bypass common anonymization tricks across several headsets. Removing usernames or swapping device IDs offered little protection when the body itself functions as the login.

When researchers tried to follow people across unrelated apps, accuracy dipped, but multimodal models made up the difference. A framework nicknamed BehaVR analyzed hand-joint and facial data, with facial cues alone achieving near-perfect scores in controlled trials.

Taken together, the literature leaves little doubt: raw motion streams act as stable biometric fingerprints even when captured for entertainment.
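The intuition behind these attacks can be shown with a toy example. The sketch below is not the method of any study cited here: it uses synthetic data, three hypothetical users, and three hand-picked features (mean head height, head-height spread, mean reach), then matches an unlabeled session to the nearest enrolled profile.

```python
import math
import random
from statistics import mean, pstdev


def simulate_session(height_m, reach_m, sway_m, n_frames, rng):
    """Synthetic stand-in for a tracking log: (head height, hand-to-head distance) per frame."""
    return [
        (height_m + rng.gauss(0, sway_m), reach_m + rng.gauss(0, 0.05))
        for _ in range(n_frames)
    ]


def features(session):
    """Summarize a session as (mean head height, head-height spread, mean reach)."""
    heights = [h for h, _ in session]
    reaches = [r for _, r in session]
    return (mean(heights), pstdev(heights), mean(reaches))


def identify(probe, enrolled):
    """Return the enrolled user whose feature vector is closest to the probe."""
    return min(enrolled, key=lambda name: math.dist(probe, enrolled[name]))


rng = random.Random(0)
# Hypothetical users: (standing head height, arm reach, head sway), all in meters.
users = {
    "alice": (1.62, 0.58, 0.010),
    "bob": (1.78, 0.66, 0.020),
    "chen": (1.70, 0.61, 0.015),
}

# Enrollment: one labeled session per user.
enrolled = {name: features(simulate_session(*p, 2000, rng)) for name, p in users.items()}

# Attack: a fresh session with the username stripped, matched on motion statistics alone.
hits = sum(
    identify(features(simulate_session(*p, 2000, rng)), enrolled) == name
    for name, p in users.items()
)
print(f"{hits} of {len(users)} sessions re-identified")
```

Real populations are far larger and the published models far more sophisticated, but the principle is the same: body proportions and movement habits persist across sessions, so deleting the username leaves the identifying signal intact.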

From Games to Robot Laboratories

Robotics engineers crave exactly the trajectories that VR collects by default. Synthetic simulations teach robots the basics, but fine motor skills come from imitation learning—algorithms that copy countless human attempts at grasping, stacking, or balancing.

An August 2024 post on the HTC VIVE developer blog framed consumer headsets as a “direct gateway” for warehouse picking and elder-care robots, arguing that every virtual slice or pinch can improve physical dexterity in the real world.

For platform owners, the incentive is clear: more data fuels better robots and future licensing deals. For gamers, the bargain often hides deep in privacy policies written for lawyers, not players, creating consent without comprehension.

The result is a dual-use dataset: the same motion logs serve graphics programmers and roboticists alike, yet they were collected through a single click-through agreement that rarely mentions warehouse automation.

Ethical Risks That Keep Researchers Up at Night

Re-identification leads the list. Stripping obvious labels does not anonymize a string of kinematic coordinates; the motion itself is the password.

Second comes health inference. Slight tremors, asymmetric gait, or unusual postural sway can hint at Parkinson’s disease or intoxication—categories the XRSI Privacy Framework classifies as sensitive medical data.

Eye-tracking adds emotional analytics. Prolonged stare duration can flag fatigue or stress, opening a back door for covert mood profiling in advertising or workplace monitoring.

Room-scanning headsets raise a fourth concern: inadvertent mapping of private homes. Coupled with precise head position, those scans help attackers reconstruct floor plans—a surveillance windfall.

Finally, bystander capture means anyone who wanders into the play space—friends, children, delivery workers—may be recorded and analyzed without ever donning a visor, making consent nearly impossible.

Regulators Turn Up the Heat

In the United States, regulators have started labeling behavioral trails as sensitive. A January 2024 Federal Trade Commission order bars data broker InMarket from selling precise location histories and forces deletion of existing troves. FTC Chair Lina Khan said the case proves companies "do not have free license to monetize data tracking people’s precise location," according to the agency’s press release.

California’s Delete Act (SB 362) goes further by promising residents a one-click tool to purge data from every registered broker. Draft rules for the Delete Request and Opt-Out Platform opened for public comment on 25 April 2025, but as of October 2025 the California Privacy Protection Agency had yet to set a launch date, according to agency board memos.

Across the Atlantic, the European Union’s Artificial Intelligence Act began phasing in on 2 February 2025. The law bans emotion-recognition software in workplaces and schools and forbids untargeted scraping for facial-recognition databases. Obligations for so-called general-purpose AI took effect in August 2025; most high-risk requirements follow in August 2026, and those for systems embedded in regulated products in August 2027, notes a European Commission factsheet.

Individual U.S. states already treat certain biometrics as highly protected. Illinois’s Biometric Information Privacy Act and Texas’s Capture or Use of Biometric Identifier Act were written for fingerprints and face scans but may soon be tested on VR motion data.

The patchwork leaves companies chasing a moving compliance target while users confront disclosures few can decipher. Yet the momentum is clear: regulators are shifting from aspirational guidelines to fines and forced data deletion.

Voluntary Standards and Technical Fixes

Civil-society groups have jumped ahead of legislatures. On 12 June 2025, non-profit XR Safety Intelligence launched its Responsible Data Governance™ 2025 v1 certification, offering an auditable rubric for immersive-data hygiene. “RDG™ is how we move from aspiration to accountability,” founder Kavya Pearlman said at the launch.

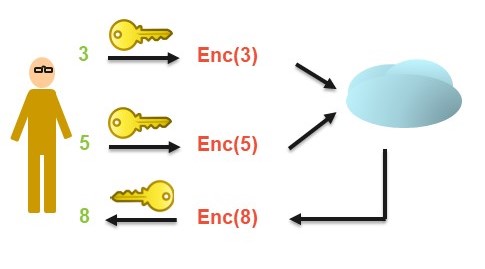

The IEEE is drafting P7016, a standard for ethically aligned design and operation of metaverse systems that aims to embed privacy checkpoints in every metaverse product cycle. The project was authorized in February 2023, according to the IEEE Standards site. A companion white paper explores zero-knowledge proofs for skeletal data, warning of "the erosion of anonymity in XR."

Technical mitigations exist. On-device aggregation keeps raw logs local, differential-privacy noise masks quirks, and federated learning trains models without centralizing data. Yet each method adds latency or erodes accuracy, and none ship as defaults on mainstream headsets.
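As a rough illustration of two of those ideas combined, the sketch below aggregates a session on the device and adds Laplace noise before anything is reported. It is a minimal sketch under stated assumptions, not a shipping implementation: the function names, the clipping bounds, and the epsilon value are hypothetical, and the privacy guarantee here covers a single frame rather than a whole user, which a real deployment would need to address.

```python
import random


def laplace_noise(scale, rng):
    """Draw Laplace(0, scale) noise as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_mean(values, lower, upper, epsilon, rng):
    """Noisy mean of values clipped to [lower, upper] (Laplace mechanism).

    Assumes the number of frames is not itself secret.
    """
    clipped = [min(max(v, lower), upper) for v in values]
    true_mean = sum(clipped) / len(clipped)
    # Changing one frame moves the clipped mean by at most this much.
    sensitivity = (upper - lower) / len(clipped)
    return true_mean + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(42)
# Hypothetical head heights (meters) from one session; raw frames never leave the device.
head_heights = [1.70 + rng.gauss(0, 0.01) for _ in range(1000)]

# Only this single noisy number would be reported upstream.
reported = private_mean(head_heights, lower=1.0, upper=2.2, epsilon=1.0, rng=rng)
print(round(reported, 2))
```

The trade-off the paragraph describes shows up directly in the `epsilon` parameter: a smaller value adds more noise and stronger protection, at the cost of a less accurate statistic.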

Until hard-engineering fixes reach commercial firmware, certification schemes like XRSI RDG or IEEE P7016 may serve as interim guardrails—provided companies choose to adopt them.

Still missing is a universal legal definition of "sensitive biometric," leaving both developers and regulators guessing where motion streams sit on the privacy spectrum.

Stakeholder Tug-of-War

Players increasingly want plain-language dashboards that explain who collects motion data and why. Many say they would gladly disable robot-training reuse if given the option.

Developers counter that throttling sensor precision would break hand presence and blow latency budgets. Robotics labs argue that without high-fidelity traces, dexterous grasping will plateau.

Platform providers sit uneasily in the middle—reluctant to scare consumers with the full extent of data collection yet eager to monetize the trove for next-generation AI.

Regulators, for their part, increasingly cite the global race to weaponize behavioral analytics in advertising as proof that self-policing no longer suffices.

Five Research-Based Observations

First, current studies describe raw body-tracking streams as a form of sensitive biometric data, comparable to fingerprints or facial imagery, and subject to similar privacy concerns.

Second, researchers note that consent mechanisms often conflate gameplay, analytics, and secondary research uses, making activity-specific and revocable consent a recurring recommendation.

Third, many papers emphasize purpose limitation: motion data gathered for entertainment is frequently repurposed for unrelated research or product development without renewed authorization.

Fourth, independent audits under frameworks such as XRSI RDG or IEEE P7016 are cited as practical tools for demonstrating compliance beyond self-reporting.

Finally, technical work continues on methods that minimize external data exposure—processing information locally or reducing the persistence of raw motion logs—while maintaining interactive performance.
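The second and third observations, activity-specific revocable consent and purpose limitation, can be made concrete with a small sketch. Everything here is hypothetical: the purpose names, the `ConsentLedger` class, and the `release_motion_log` gate are illustrative and do not describe any platform's actual consent system.

```python
from dataclasses import dataclass, field
from enum import Enum


class Purpose(Enum):
    GAMEPLAY = "gameplay"
    ANALYTICS = "analytics"
    ROBOT_TRAINING = "robot_training"


@dataclass
class ConsentLedger:
    """Tracks which purposes a player has currently authorized."""
    granted: set = field(default_factory=set)

    def grant(self, purpose: Purpose) -> None:
        self.granted.add(purpose)

    def revoke(self, purpose: Purpose) -> None:
        # Revoking a purpose that was never granted is a harmless no-op.
        self.granted.discard(purpose)

    def allows(self, purpose: Purpose) -> bool:
        return purpose in self.granted


def release_motion_log(log, purpose: Purpose, ledger: ConsentLedger):
    """Hand over a motion log only if the player consented to this exact purpose."""
    if not ledger.allows(purpose):
        raise PermissionError(f"no consent for {purpose.value}")
    return log


ledger = ConsentLedger()
ledger.grant(Purpose.GAMEPLAY)

release_motion_log(["frame-0"], Purpose.GAMEPLAY, ledger)  # permitted
try:
    release_motion_log(["frame-0"], Purpose.ROBOT_TRAINING, ledger)
except PermissionError as err:
    print(err)  # no consent for robot_training
```

The design choice worth noting is that consent is checked per purpose at the moment of release, so agreeing to gameplay never silently authorizes robot training, and a later `revoke` takes effect on the next request.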

Where Momentum and Consent Collide

Body-tracking data is the lifeblood of immersive computing—and a lucrative biometric. As headsets morph from gaming gear into robot-training portals, the stakes only rise.

Guardrails erected in 2024-25 suggest innovation and autonomy can coexist, but only if motion streams shed their cloak of invisibility. The next time you slash a virtual saber, remember you may also be coaching the robot that delivers tomorrow’s package.

Sources

- Nair V. et al. "Unique Identification of 50,000+ Virtual Reality Users From Head & Hand Motion Data." USENIX Security 2023.

- Miller M.R. et al. "Personal Identifiability of User-Tracking Data During Observation of 360-Degree VR Video." Scientific Reports 2020.

- Boyd B. et al. "Identifying Users in Social VR From Eye-Gaze Patterns." Virtual Reality 2025.

- Aziz T. & Komogortsev O. "Cross-Platform Attack Using Eye and Hand Tracking." arXiv 2411.12766 (2024).

- Schach L. et al. "Motion-Based User Identification Across XR Applications." arXiv 2509.08539 (2025).

- Jarin I. et al. "BehaVR: User Identification Based on VR Sensor Data." arXiv 2308.07304 (2023).

- HTC VIVE Blog. "The Hidden Role of Virtual Reality in Humanoid Robot Learning and Training." 15 Aug 2024.

- Federal Trade Commission. "FTC Order Will Ban InMarket From Selling Precise Consumer Location Data." 18 Jan 2024.

- California Privacy Protection Agency. "Proposed DROP Regulations." 25 Apr 2025.

- European Commission. "Regulatory Framework: Artificial Intelligence." 2024.

- XR Safety Intelligence. "XRSI Launches the Responsible Data Governance™ Standard." 12 Jun 2025.

- IEEE Standards Association. "P7016 – Standard for Ethically Aligned Design and Operation of Metaverse Systems." 2023.