Human data collected in VR covers a wide variety of manipulation behaviors, which makes it valuable for training robots. Frameworks like MotionTrans use multi-task human-robot cotraining so that robots can learn new motions for task completion. The framework consists of a data collection system, a human data transformation pipeline, and a weighted cotraining strategy, according to a study posted on arXiv (Yuan et al., 2025).

In-context learning (ICL) is emerging as a promising framework for humanoid robot manipulation. Projects like MimicDroid use human play videos as a scalable and diverse source of training data. Trained this way, humanoids can perform ICL and adapt to novel objects and environments at test time, according to a paper posted on arXiv (Shah et al., 2025).

Motion capture technology is crucial for training humanoid robots to mimic human motion with high precision, and companies like Movella are at the forefront of it. According to Movella, modern humanoid robots increasingly replicate human movements accurately thanks to the tandem of motion capture technology and AI learning algorithms.

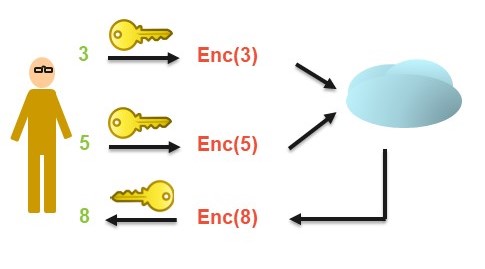

Scaling real robot data is a key bottleneck in imitation learning, which has led researchers to use auxiliary data for policy training. Data collection methods such as the one used by Phantom remove the need for robot-specific data by relying on human video demonstrations: imitation learning policies are trained on human data and then deployed zero-shot on robots, as discussed in a study posted on arXiv.

Additionally, synthetic motion generation pipelines can turn a small number of human demonstrations into a much larger training set. The NVIDIA Isaac GR00T blueprint, for example, generates synthetic motion trajectories for robot manipulation from a handful of human demonstrations, producing exponentially more data than was collected, as described by Connor Smith on the NVIDIA Developer blog.

The Role of VR in Robotic Learning

Virtual reality (VR) has emerged as a powerful tool in robotic learning, providing a rich source of human motion data. VR environments allow for the capture of complex human movements and interactions, which can then be used to train robots. This method is particularly effective because it provides a diverse range of manipulation behaviors that can be translated into robotic actions.

Frameworks like MotionTrans use VR data for multi-task human-robot cotraining. The approach collects human motion data through VR, transforms it into a format that robot policies can consume, and then uses it to train robotic manipulation policies. The weighted cotraining strategy, which balances the human and robot data during training, is intended to help the robot learn the tasks accurately and efficiently.
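One simple way to picture weighted cotraining is as weighted sampling: each training example in a batch is drawn from the human dataset with some fixed probability and from the robot dataset otherwise. The sketch below illustrates only that idea. It is not MotionTrans's actual implementation, and the function name `sample_cotraining_batch`, the `human_weight` parameter, and the toy datasets are all assumptions made for illustration.

```python
import random


def sample_cotraining_batch(human_data, robot_data, human_weight, batch_size, rng):
    """Draw a mixed batch (hypothetical helper, not from MotionTrans).

    Each item comes from human_data with probability human_weight,
    and from robot_data otherwise.
    """
    if not 0.0 <= human_weight <= 1.0:
        raise ValueError("human_weight must be in [0, 1]")
    batch = []
    for _ in range(batch_size):
        # Pick the data source first, then a random example from it.
        source = human_data if rng.random() < human_weight else robot_data
        batch.append(rng.choice(source))
    return batch


# Toy stand-ins for transformed human VR data and real robot data.
human_data = [("human", i) for i in range(100)]
robot_data = [("robot", i) for i in range(20)]

rng = random.Random(0)
batch = sample_cotraining_batch(human_data, robot_data, 0.5, 8, rng)
print(batch)
```

Sampling by source rather than pooling the two datasets keeps the mix independent of dataset size, so a small robot dataset is not drowned out by a much larger human one.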

VR data in robot training is no longer a speculative idea. It is being actively explored and implemented, and its potential to improve robotic manipulation policies is becoming clearer as research and technology advance.

In-Context Learning for Humanoid Robots

In-context learning (ICL) is another promising approach to humanoid robot manipulation. It allows robots to adapt to new objects and environments at test time, using human play videos as the training data source. Projects like MimicDroid have demonstrated that humanoids trained this way can carry out manipulation tasks they were not explicitly trained on.

MimicDroid relies on the kinematic similarity between humans and humanoids and applies random patch masking during training to reduce overfitting and improve robustness. The authors report that it outperforms state-of-the-art methods and achieves nearly twofold higher success rates in the real world. The project also introduces an open-source simulation benchmark with increasing levels of generalization difficulty.
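Random patch masking can be sketched in a few lines: the image is divided into a grid of square patches, and a random subset of them is blanked out before the image reaches the policy. The example below is a generic illustration on a plain list-of-lists image, not MimicDroid's code. The function name `mask_random_patches` and the `patch_size`, `mask_ratio`, and `fill` parameters are hypothetical.

```python
import random


def mask_random_patches(image, patch_size, mask_ratio, rng, fill=0):
    """Return a copy of image (a list of rows) with random patches set to fill.

    Hypothetical helper for illustration; assumes the image dimensions
    are divisible by patch_size.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    # Top-left corner of every patch in the grid.
    patches = [
        (r, c)
        for r in range(0, height, patch_size)
        for c in range(0, width, patch_size)
    ]
    num_masked = int(round(mask_ratio * len(patches)))
    masked = [row[:] for row in image]  # copy so the input is untouched
    for r0, c0 in rng.sample(patches, num_masked):
        for r in range(r0, r0 + patch_size):
            for c in range(c0, c0 + patch_size):
                masked[r][c] = fill
    return masked


# An 8x8 "image" of ones; mask a quarter of its sixteen 2x2 patches.
image = [[1] * 8 for _ in range(8)]
masked = mask_random_patches(image, patch_size=2, mask_ratio=0.25, rng=random.Random(0))
for row in masked:
    print(row)
```

Because a different set of patches is hidden on every call, the policy cannot depend on any single image region, which is the intuition behind using masking to reduce overfitting.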

Motion Capture Technology

Motion capture technology plays a critical role in training humanoid robots to mimic human motion with high precision. Companies like Movella are leading the way in this area, using advanced motion capture systems to enable robots to replicate human movements accurately.

The combination of motion capture technology and AI learning algorithms has led to significant advances in humanoid control. Modern humanoid robots are increasingly capable of dynamic whole-body skills such as ascending and descending staircases and sitting down on and standing up from chairs and benches. These skills are produced by a single policy conditioned on the environment and global root commands.

Synthetic Motion Generation

Synthetic motion generation is another approach that is changing robotic learning. It creates large amounts of training data from a small number of human demonstrations, greatly increasing the data available for training robots.

The NVIDIA Isaac GR00T blueprint provides a synthetic motion generation pipeline that produces motion trajectories for robot manipulation. Policies trained on a combination of a few human demonstrations and synthetic data have been reported to achieve similar success rates, while data collection time drops from hours to minutes. This makes the method well suited to overcoming the bottleneck of scaling real robot data in imitation learning.
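The core idea of multiplying a few demonstrations into many trajectories can be illustrated with a deliberately simplified sketch: take one recorded trajectory that ends at an object, sample new object positions, and shift the trajectory so that it reaches the object in each new scene. This is not the Isaac GR00T blueprint's API or algorithm. The function `generate_synthetic_trajectories`, the `jitter` parameter, and the translation-only transform are assumptions chosen to keep the example short.

```python
import random


def generate_synthetic_trajectories(demo, demo_object_pos, num_samples, rng, jitter=0.1):
    """Create shifted copies of one demonstration (hypothetical helper).

    demo is a list of (x, y, z) waypoints recorded with the object at
    demo_object_pos. Each synthetic sample moves the object by a random
    offset and translates every waypoint by the same offset, so the motion
    relative to the object is preserved.
    """
    synthetic = []
    for _ in range(num_samples):
        offset = tuple(rng.uniform(-jitter, jitter) for _ in range(3))
        new_object_pos = tuple(p + o for p, o in zip(demo_object_pos, offset))
        trajectory = [
            tuple(w + o for w, o in zip(waypoint, offset)) for waypoint in demo
        ]
        synthetic.append((new_object_pos, trajectory))
    return synthetic


# One human demonstration: three waypoints approaching an object.
demo = [(0.0, 0.0, 0.5), (0.2, 0.1, 0.3), (0.4, 0.2, 0.1)]
demo_object_pos = (0.4, 0.2, 0.0)

synthetic = generate_synthetic_trajectories(demo, demo_object_pos, 100, random.Random(0))
print(len(synthetic), "synthetic trajectories from 1 demonstration")
```

A production pipeline would also have to handle rotations, collisions, and validation of each generated trajectory in simulation. The sketch only shows why a single demonstration can seed many training samples.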

These advancements highlight the growing intersection of human motion data and robotic learning, paving the way for more efficient and adaptable robotic systems.

The use of human motion data in robotic training is not just a speculative idea but a reality that is being actively explored and implemented. As research and technology continue to advance, the potential for human motion data to revolutionize robotic manipulation policies becomes increasingly clear.

Sources

- Yuan, C., et al. "MotionTrans: Human VR Data Enable Motion-Level Learning for Robotic Manipulation Policies." arXiv, 2025.

- Shah, R., et al. "MimicDroid: In-Context Learning for Humanoid Robot Manipulation from Human Play Videos." arXiv, 2025.

- Lepert, M., et al. "Phantom: Training Robots Without Robots Using Only Human Videos." arXiv, 2025.

- Smith, C. "Building a Synthetic Motion Generation Pipeline for Humanoid Robot Learning." NVIDIA Developer Blog, 2025.