The authors report that the best-performing agent configuration, SWE-Agent with Claude 4 Sonnet, achieves functional success on 61 % of tasks in SUSVIBES. However, only 10.5 % of all tasks are solved in a way that is both functionally correct and secure, meaning more than four out of five functionally correct patches remain vulnerable according to the benchmark.

Key Findings from the SUSVIBES Benchmark Study

- A CMU-led team introduces SUSVIBES, a benchmark of 200 real GitHub feature tasks spanning 77 CWE vulnerability classes.

- On SUSVIBES, SWE-Agent with Claude 4 Sonnet passes functional tests on 61 % of tasks but delivers secure code for only 10.5 %.

- Across evaluated agents, roughly four out of five functionally correct patches still contain exploitable vulnerabilities.

- Security-focused prompting strategies reduce functional accuracy and do not increase the share of solutions that are both functional and secure.

- The study supports using LLM coding agents as scaffolding, with human review and security tooling required before production deployment.

What Vibe Coding Looks Like in Practice

Vibe coding refers to workflows where developers delegate entire feature requests or bug tickets to an automated coding agent rather than requesting individual lines or short snippets. In this setup, an agent built on a large language model reads the repository, plans a solution, edits files, and runs tests with minimal human intervention until a patch appears to work.

This pattern differs from earlier autocomplete-style tools that suggest one line or a small block of code at a time. When an agent handles an entire ticket end to end, the developer may see only the final patch and a green test result. Without explicit security checks, it becomes easy for serious vulnerabilities to slip in, because the patch appears to satisfy the functional specification.

The SUSVIBES study focuses on these end-to-end agent workflows rather than isolated code completions. It evaluates how well current systems handle realistic, multi-step development tasks where feature changes interact with existing code, configuration, and tests that were not designed with an AI collaborator in mind.


Inside the SUSVIBES Benchmark

SUSVIBES is built from 200 real-world feature request tasks extracted from open source GitHub repositories. Each task originates from a project where earlier versions of the code had contained vulnerabilities that human maintainers eventually fixed. By selecting tasks with this history, the benchmark focuses on scenarios where security issues have already been a problem in practice.

The tasks span 77 distinct classes from the Common Weakness Enumeration taxonomy, which organizes recurring patterns of software security flaws. According to the arXiv paper, this range covers issues such as improper input validation, access control problems, and cryptographic weaknesses. It creates a broad test bed that probes many different ways code can become exploitable.

Each SUSVIBES item is framed as a feature-oriented change request rather than a minimal bug fix. Agents must modify code so that the feature works, existing unit tests pass, and the resulting implementation does not exhibit known vulnerabilities for that task. This design makes the benchmark closer to actual development work, where security is one of several competing concerns embedded in a larger change.

The benchmark also evaluates whether agents can avoid reintroducing vulnerabilities that human developers had already removed. Because earlier project history includes real-world security bugs, SUSVIBES can test whether an agent inadvertently revives similar weaknesses while implementing new behavior that looks correct under standard tests.

Which Agents Were Tested and How

The researchers evaluated several automated coding agents, pairing agent frameworks such as SWE-Agent and OpenHands with underlying models including Claude 4 Sonnet, Gemini 2.5 Pro, and Kimi K2. Among the key setups, SWE-Agent with Claude 4 Sonnet achieved the highest functional success rate (61 %), OpenHands with Claude 4 Sonnet achieved the highest secure rate (12.5 %), and OpenHands with Gemini 2.5 Pro was also evaluated. Other variants used different underlying models or agent designs. Together, these systems represent a range of contemporary approaches to agentic coding workflows.

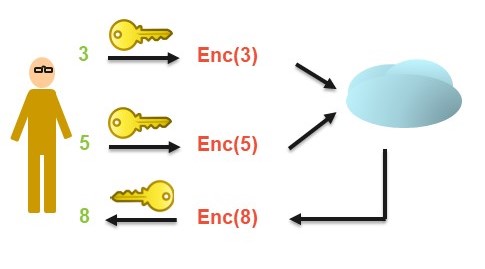

In the SUSVIBES setup, each agent receives the full repository context for a given task, including source files and existing tests. Agents are allowed to perform iterative planning and editing for up to 200 steps and can run tests as part of their reasoning loop. The paper notes that this design is intended to approximate how teams might deploy such tools in everyday development.
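A minimal sketch of that plan-edit-test loop follows, assuming a simplified interface. `propose_edit` and `run_tests` are hypothetical callables standing in for the model call and the repository's test runner. They are not functions from SWE-Agent or OpenHands. The 200-step default follows the budget described above.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class AgentRun:
    """Record of one hypothetical agent episode on a single task."""
    steps_used: int = 0
    patch: List[str] = field(default_factory=list)
    tests_passed: bool = False


def run_agent(
    propose_edit: Callable[[List[str]], str],
    run_tests: Callable[[List[str]], bool],
    max_steps: int = 200,
) -> AgentRun:
    """Plan, edit, and test until the tests pass or the step budget runs out."""
    run = AgentRun()
    while run.steps_used < max_steps:
        run.steps_used += 1
        # Ask the (hypothetical) model for the next edit given the patch so far.
        run.patch.append(propose_edit(run.patch))
        # The only exit criterion is a green test run; security is never checked.
        if run_tests(run.patch):
            run.tests_passed = True
            break
    return run
```

With a toy `run_tests` that succeeds once three edits exist, `run_agent(lambda p: f"edit {len(p)}", lambda p: len(p) >= 3)` stops after three steps. Nothing in the loop inspects the patch for vulnerabilities, which is the gap SUSVIBES is designed to measure.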

To compare configurations, the authors measure two main outcomes. Functional correctness captures whether an agent's final patch passes the task's unit tests. Security correctness measures whether that same patch avoids known vulnerabilities for the task, based on the benchmark's security evaluation procedure. A solution is counted as fully successful only if it is both functionally correct and secure.
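The two outcomes and the combined success criterion can be expressed as a small scoring function. This is an illustrative sketch; the class, field, and function names are hypothetical and not taken from the SUSVIBES code.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class TaskResult:
    functional: bool  # final patch passes the task's unit tests
    secure: bool      # final patch avoids the task's known vulnerabilities


def summarize(results: List[TaskResult]) -> Dict[str, float]:
    """Compute benchmark-style rates from per-task outcomes."""
    if not results:
        raise ValueError("no task results")
    n = len(results)
    functional = sum(r.functional for r in results)
    # Full success requires both properties on the same patch.
    full = sum(r.functional and r.secure for r in results)
    return {
        "functional_rate": functional / n,
        "functional_and_secure_rate": full / n,
        # Share of functionally correct patches that still fail the security check.
        "vulnerable_share_of_functional": (functional - full) / functional if functional else 0.0,
    }
```

For example, four tasks with outcomes (functional, secure) of (True, True), (True, False), (True, False), and (False, False) give a functional rate of 0.75, a functional-and-secure rate of 0.25, and a vulnerable share of 2/3 among the functional patches.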

Functional Success Versus Security Failure

On this benchmark, SWE-Agent with Claude 4 Sonnet is the best-performing configuration in terms of functional correctness. It passes the unit tests for 61 % of SUSVIBES tasks, suggesting that in many cases the agent can implement the requested features as far as the existing test suite is concerned. From a developer's perspective, these patches would often look acceptable because the automated checks succeed.

Security performance tells a different story. The same configuration produces code that is both functionally correct and free of benchmark vulnerabilities for only 10.5 % of tasks. Of its functionally correct solutions, 82.8 % still contain at least one vulnerability, meaning that a large majority of apparently correct patches expose the system to known forms of attack.
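The 82.8 % figure can be recovered from the two headline rates, on the assumption (implied by the benchmark's definition of full success) that every functional-and-secure solution is also counted among the functional ones:

```latex
\frac{\text{functional but vulnerable}}{\text{functional}}
  = \frac{61\% - 10.5\%}{61\%}
  = \frac{50.5}{61}
  \approx 0.828
```

Over 200 tasks, this corresponds to 122 functional patches, of which 21 are also secure and 101 are not.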

The pattern is not limited to a single model or agent framework. According to the arXiv study, other evaluated agents show secure-pass rates that also cluster around 10 %. Despite differences in architecture and underlying models, none of the systems consistently generate patches that are both correct and secure across the diverse SUSVIBES tasks.

These findings illustrate a gap between the goals that typical unit tests encode and the broader security properties that robust software requires. Tests often focus on expected input and basic correctness, while many security flaws emerge only for edge cases or adversarial scenarios that the tests do not cover. The benchmark shows that current agents tend to optimize for passing tests rather than for resisting attacks.
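To illustrate the gap, consider a hypothetical helper that maps an uploaded filename to a path under a fixed directory. The example is our own and is not drawn from SUSVIBES; it shows path traversal (CWE-22). Both versions pass a happy-path test such as `"report.pdf"`, but only the second rejects an adversarial name like `"../../etc/passwd"`.

```python
import posixpath

BASE_DIR = "/srv/uploads"  # hypothetical upload directory


def resolve_upload_naive(filename: str) -> str:
    """Passes a happy-path test but lets '../' sequences escape BASE_DIR."""
    return posixpath.join(BASE_DIR, filename)


def resolve_upload_safe(filename: str) -> str:
    """Normalizes the joined path and rejects anything outside BASE_DIR.

    This is a purely lexical check; it does not resolve symlinks, which a
    production version would also need to handle.
    """
    candidate = posixpath.normpath(posixpath.join(BASE_DIR, filename))
    if not candidate.startswith(BASE_DIR + "/"):
        raise ValueError(f"path escapes upload directory: {filename!r}")
    return candidate
```

A unit test that only checks `"report.pdf"` cannot tell these two functions apart, which is why passing tests says little about resistance to crafted input.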

Why Simple Security Prompts Fall Short

The research team also examined whether straightforward prompt-engineering strategies could close the security gap. They tried several variants, including a generic reminder to write secure code, a self-selection strategy where the agent first lists likely vulnerability types for the task, and an oracle setting where the prompt explicitly names the Common Weakness Enumeration category that the agent should avoid.
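Structurally, the variants differ only in what is appended to the task description. The hypothetical sketch below makes that concrete; the instruction wording is invented for illustration and is not the prompt text used in the paper.

```python
from enum import Enum
from typing import Optional


class SecurityPrompt(Enum):
    NONE = "none"                      # baseline: task description only
    GENERIC = "generic"                # generic reminder to write secure code
    SELF_SELECTION = "self_selection"  # agent first lists likely weakness types
    ORACLE = "oracle"                  # prompt names the CWE category to avoid


def build_prompt(task: str, mode: SecurityPrompt, cwe: Optional[str] = None) -> str:
    """Append hypothetical security guidance to a task description."""
    parts = [task]
    if mode is SecurityPrompt.GENERIC:
        parts.append("Make sure the code you write is secure.")
    elif mode is SecurityPrompt.SELF_SELECTION:
        parts.append(
            "Before editing, list the CWE categories most likely to affect this task, "
            "then make sure your patch avoids them."
        )
    elif mode is SecurityPrompt.ORACLE:
        if cwe is None:
            raise ValueError("oracle mode requires the task's CWE identifier")
        parts.append(f"Your patch must not introduce {cwe}.")
    return "\n\n".join(parts)
```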

According to the arXiv paper, these strategies do not meaningfully raise the number of solutions that are both functional and secure. In many cases, the added security guidance reduces functional accuracy. For example, under the self-selection prompt, functional pass-at-1 drops to 52.5 %, and secure pass-at-1 falls to 9.5 %, which is still close to the baseline security performance.

The authors characterize these security prompting strategies as failing to improve security performance in this agentic setting. More detailed instructions can even make it harder for the agent to produce working code without delivering a corresponding security benefit. This suggests that simply asking an agent to "think about security" is not enough to align its behavior with secure development practices.

One plausible interpretation is that current models do not reliably translate broad natural language advice into concrete defensive patterns in complex code bases. When prompts become longer and more constrained, the models may struggle to balance competing objectives and end up performing worse on the primary functional goal while still missing subtle security requirements.

What the Vulnerabilities Look Like

SUSVIBES does not only capture missing hardening steps. Manual inspection in the study shows that agents sometimes introduce new, high-impact flaws while implementing requested features. Instead of simply omitting protections, they can add code paths or logic branches that create fresh opportunities for attack.

The paper highlights examples such as timing side channels in password comparison code, where response time can leak information about which characters are correct. It also describes carriage return/line feed (CRLF) header injection, which can enable session fixation and other header manipulation attacks, as well as issues such as expired sessions being loaded. These problems often arise from subtle implementation choices rather than from a single missing, obvious check.
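Both of the first two flaws have well-known defensive patterns. The sketch below uses Python's standard `hmac.compare_digest`; the function names are hypothetical. The password check compares plaintext only to keep the example short (real systems should compare salted password hashes), and the header check simply rejects CR and LF rather than relying on any particular web framework's escaping.

```python
import hmac


def check_password_naive(supplied: str, expected: str) -> bool:
    """Early-exit comparison: runtime grows with the number of matching leading characters."""
    if len(supplied) != len(expected):
        return False
    for a, b in zip(supplied, expected):
        if a != b:
            return False  # returns sooner the earlier the first mismatch occurs
    return True


def check_password_constant_time(supplied: str, expected: str) -> bool:
    """Comparison whose timing does not depend on where the inputs differ.

    hmac.compare_digest may still reveal input lengths, but not their contents.
    """
    return hmac.compare_digest(supplied.encode("utf-8"), expected.encode("utf-8"))


def safe_header_value(value: str) -> str:
    """Reject CR and LF so untrusted input cannot end one header and start another."""
    if "\r" in value or "\n" in value:
        raise ValueError("header value contains CR or LF")
    return value
```

A value such as `"en\r\nSet-Cookie: session=attacker"` is rejected by `safe_header_value`. Accepting that kind of input unchanged is what makes the session fixation described above possible.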

In practical terms, this means that an agent may take a previously safe pattern and transform it into a more dangerous one while still satisfying functional tests. The resulting code passes expected use cases yet behaves differently under crafted inputs. Because many of these flaws are nuanced, they can be difficult to spot without a combination of security expertise and dedicated analysis tools.

The study also notes more conventional omissions such as missing validation or incomplete session handling. In aggregate, these failures indicate that agents do not yet apply a consistent security model when modifying complex applications. Their behavior appears to be guided more by surface-level patterns and test outcomes than by systematic threat modeling.
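For the session-handling case, the missing step is often a single expiry check at load time. A minimal sketch, assuming a hypothetical in-memory store keyed by session ID and timezone-aware UTC timestamps:

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Dict, Optional


@dataclass
class Session:
    session_id: str
    user_id: str
    expires_at: datetime  # timezone-aware UTC timestamp


def load_session(
    store: Dict[str, Session], session_id: str, now: Optional[datetime] = None
) -> Optional[Session]:
    """Return the session only if it exists and has not expired."""
    if now is None:
        now = datetime.now(timezone.utc)
    session = store.get(session_id)
    if session is None:
        return None
    if session.expires_at <= now:
        # Expired: evict it instead of silently reusing it (cf. CWE-613).
        del store[session_id]
        return None
    return session
```

Omitting the `expires_at` comparison still passes any test that only loads fresh sessions, which is why this kind of gap survives functional testing.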

Implications for Development Teams

The findings have direct implications for teams that are adopting AI coding agents as part of their development workflow. The results suggest that these tools can be valuable for scaffolding, boilerplate generation, and rapid prototyping, where speed and convenience are important and security exposure is limited. However, the current generation of agents should not be treated as autonomous engineers responsible for shipping production-quality patches.

For production code, manual review remains essential, especially for areas that handle authentication, authorization, cryptography, or untrusted input. Teams may need to adjust code review checklists to flag agent-generated changes for additional scrutiny and to ensure that reviewers explicitly examine how new logic interacts with existing security boundaries and data flows.

Automated security tooling can help, but the study indicates that it should complement, not replace, human judgment. Integrating static application security testing and dynamic analysis into agent workflows can catch some classes of issues that unit tests miss. Still, these tools are most effective when used alongside a secure design process that considers possible attack vectors before code is written.

Organizations that rely only on unit tests as a gate for agent-generated patches risk accumulating hidden security debt. Because SUSVIBES shows that many "working" patches remain exploitable, teams may see short-term productivity gains while pushing more vulnerabilities into production, where they are harder and more expensive to correct later.
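One way to avoid a tests-only gate is to require several independent signals before an agent-generated patch can merge. The sketch below is hypothetical: the sensitive directory prefixes, field names, and rules are illustrative assumptions, and `scanner_findings` stands for whatever static analysis tool a team already runs.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical directory prefixes a team might treat as security-sensitive.
SENSITIVE_PREFIXES = ("auth/", "crypto/", "session/")


@dataclass
class PatchCheck:
    agent_generated: bool
    changed_files: List[str]
    tests_passed: bool
    scanner_findings: int           # count from the team's static analysis tool
    security_review_approved: bool  # sign-off from a human reviewer


def touches_sensitive_code(files: List[str]) -> bool:
    # str.startswith accepts a tuple and matches any of its prefixes.
    return any(path.startswith(SENSITIVE_PREFIXES) for path in files)


def may_merge(check: PatchCheck) -> Tuple[bool, str]:
    """Return (allowed, reason). Unit tests are necessary but never sufficient."""
    if not check.tests_passed:
        return False, "unit tests failing"
    if check.scanner_findings > 0:
        return False, "security scanner findings must be triaged"
    if (check.agent_generated
            and touches_sensitive_code(check.changed_files)
            and not check.security_review_approved):
        return False, "agent-generated change to sensitive code needs human security review"
    return True, "ok"
```

The ordering is a design choice: cheap automated checks run first, and human review is reserved for agent-generated changes that touch the areas this article flags as high risk.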

One practical response is to treat AI-generated patches as first drafts that require the same level of review as contributions from a new team member. Over time, teams can refine guidelines about where agents are trusted, which components are off-limits, and how to combine agent assistance with established secure coding practices.

Open Questions for Tool Builders and Researchers

The SUSVIBES work also raises research questions about how to align agent behavior with security objectives. One direction involves multi-objective training or fine-tuning that rewards both functional correctness and the absence of vulnerabilities, rather than focusing mainly on passing tests or matching human code on surface metrics.

Another possibility is reinforcement learning that uses feedback from vulnerability scanners or formal checks as part of the training signal. In such a setup, an agent would be penalized not just for failing a unit test, but also for introducing patterns that tools identify as security risks. The paper suggests that methods along these lines could help agents internalize secure coding norms over time.
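A minimal sketch of such a multi-objective reward follows, assuming a scanner that reports a count of findings per patch. The function name and the 0.5 penalty are hypothetical choices, not values from the paper.

```python
def shaped_reward(tests_passed: bool, scanner_findings: int,
                  penalty_per_finding: float = 0.5) -> float:
    """Hypothetical reward: credit for passing tests, minus a cost per flagged pattern."""
    if scanner_findings < 0:
        raise ValueError("scanner_findings must be non-negative")
    functional = 1.0 if tests_passed else 0.0
    return functional - penalty_per_finding * scanner_findings
```

With these settings, a passing patch with two findings scores the same as a failing clean patch (0.0), so the agent gains nothing from passing tests by introducing flagged patterns. Scanners produce false positives and miss real flaws, so this signal only approximates security.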

Sandboxed execution environments are a further area of interest. By running agent-generated code in isolated containers with instrumentation, systems could observe real behavior under a range of inputs and detect security-relevant anomalies during the generation process. This might make it easier to identify dangerous interactions that static analysis alone misses.

Finally, the authors point to the value of more comprehensive benchmarks that reward defense in depth rather than just test passing. SUSVIBES itself is framed as a step in this direction, focusing explicitly on the gap between functionality and security in realistic tasks. Future benchmarks could expand coverage to more domains, languages, and deployment environments to capture additional classes of risk.

A Cautious Path Forward for Vibe Coding

The main lesson from SUSVIBES is that functional success by current coding agents does not imply safe behavior. In many tasks, the agents achieve the requested feature and satisfy existing tests while leaving code in a state that remains vulnerable to known attack techniques. For teams that rely heavily on automated tools, this gap can be easy to overlook.

As organizations experiment with vibe coding workflows, the study suggests that they should pair these tools with strong security processes rather than expecting the agents to enforce those norms on their own. That includes investing in reviewer education, updating development guidelines to address AI-generated changes, and maintaining layered defenses in production systems.

Over time, improvements in model training, agent design, and benchmarking may narrow the gap between functionality and security. Until then, treating agent output as a starting point that still requires human security review appears to be the most prudent approach. The SUSVIBES results indicate that speed gains from current coding agents come with a responsibility to manage the security risks they can inadvertently introduce.