This explainer traces the road from early word-counting tricks to today’s giant language models. In everyday language, it shows what transformers are, how they work, and why the shift from sequential guessing to holistic pattern-matching opened the door to the current AI boom.

Life Before Transformers

In the 1990s search engines treated text like marbles in a jar: the more times a word appeared, the more it mattered. These “bag-of-words” methods counted tokens but ignored order, so “dog bites man” looked identical to “man bites dog.” Their simplicity powered early web search, yet they had no grasp of meaning.
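
The blind spot is easy to reproduce. The sketch below is a minimal bag-of-words counter (the function name `bag_of_words` is made up for this illustration) showing that two sentences with opposite meanings produce the same counts.

```python
from collections import Counter

def bag_of_words(text):
    """Count lowercase word occurrences, discarding word order."""
    return Counter(text.lower().split())

a = bag_of_words("dog bites man")
b = bag_of_words("man bites dog")

# Both sentences reduce to the same counts, so the comparison is True.
print(a == b)
```

Anything built only on these counts, however elaborate, inherits the same limitation.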

A leap arrived in 2013 when researchers at Google released Word2Vec, showing how to place words as points in a high-dimensional space so that words used in similar contexts sit close together. The original arXiv paper reported that simple vector arithmetic captures relationships between words: “king” minus “man” plus “woman” lands near “queen,” an example that popular demonstrations later made famous.
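
To make the arithmetic concrete, here is a toy sketch. The two-dimensional vectors are invented by hand for illustration (one axis loosely meaning "royalty," the other "gender") and are not real Word2Vec output; real embeddings have hundreds of dimensions learned from text. The helper names `cosine` and `analogy` are likewise assumptions of this example.

```python
import math

# Hand-made 2-D toy vectors (axes: "royalty", "gender"). NOT real Word2Vec output.
vectors = {
    "king":  (1.0,  1.0),
    "queen": (1.0, -1.0),
    "man":   (0.0,  1.0),
    "woman": (0.0, -1.0),
    "apple": (-1.0, 0.0),
}

def cosine(u, v):
    """Cosine similarity: 1.0 means the vectors point the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))

def analogy(a, b, c):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding the inputs."""
    target = tuple(x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c]))
    candidates = (w for w in vectors if w not in (a, b, c))
    return max(candidates, key=lambda w: cosine(target, vectors[w]))

print(analogy("king", "man", "woman"))  # queen
```

With these toy numbers the answer is exact by construction; with real embeddings the result is only the nearest neighbor, which is why the claim is that the sum lands "near" queen.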

Recurrent neural networks (RNNs) and their sturdier cousins, long short-term memory networks (LSTMs), took a different route to word order: they read sentences one token at a time, passing a hidden state forward like a notepad. They captured order, but their memory faded with distance; asking them to relate the first clause of a paragraph to its last was like asking a sprinter to run a marathon.
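
A stripped-down sketch shows both traits: the loop that forces one-step-at-a-time processing, and the fading memory. This is a single-number recurrent cell with arbitrary, hand-picked weights (`w_h` and `w_x` are assumptions, not trained values), so it illustrates the mechanism rather than any real model.

```python
import math

def run_rnn(inputs, w_h=0.5, w_x=1.0):
    """Run a one-unit recurrent cell and return the hidden state after each step."""
    h = 0.0
    trace = []
    for x in inputs:  # strictly sequential: step t needs the state from step t-1
        h = math.tanh(w_h * h + w_x * x)
        trace.append(h)
    return trace

# One informative token at the start, followed by nine uninformative ones.
trace = run_rnn([1.0] + [0.0] * 9)
for step, h in enumerate(trace, start=1):
    print(step, round(h, 4))
```

The printed state shrinks at every step, so by the end of the sequence almost nothing of the first token remains. LSTMs add gates to slow that decay, but the sequential loop stays.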

Early attention mechanisms patched that gap. A 2014 study by Bahdanau and colleagues let translation models glance back at specific source words instead of trusting a single bottleneck vector—a hint that peeking at the whole sequence, if done efficiently, could trump plodding step by step.

The 2017 Breakthrough

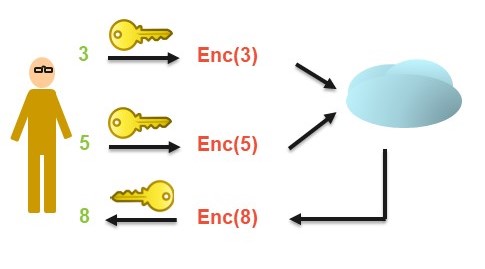

The dam burst when eight researchers from Google Brain, Google Research, and the University of Toronto published “Attention Is All You Need” at the 2017 NIPS conference (now NeurIPS). Their proposal threw out recurrence entirely. Instead, every token produces three vectors (a query, a key, and a value); the model compares each query against all keys in parallel and uses the resulting scores to weight the corresponding values into new representations.

Because the comparisons form simple matrix multiplications, GPUs or TPUs can crunch them simultaneously. Translation models that once trained for weeks on recurrent architectures finished far faster, and the design handled substantially longer sentences without forgetting the start.

Benchmarks told the story: according to the 2017 paper, the original transformer set single-model state-of-the-art BLEU scores on the WMT 2014 English-to-German (28.4) and English-to-French (41.8) translation tasks after training for 3.5 days on eight GPUs, a fraction of the training cost of the best earlier systems.

Self-attention did not merely refine older models; it rewired NLP’s core assumption. Language could be processed all at once, not spoon-fed token by token. That shift unlocked the scaling frenzy that followed.

From BERT to GPT and Beyond

Researchers soon stretched transformers to new limits. Google’s BERT, introduced in 2018 and described on arXiv, pre-trained on masked-word prediction in both directions, then fine-tuned easily for tasks from sentiment analysis to question answering.

OpenAI pushed the autoregressive side. Its 2020 paper on GPT-3 detailed a 175-billion-parameter model that could pick up new tasks from a handful of examples written into the prompt, with no retraining, a skill known as few-shot learning.

The same year, Kaplan and colleagues quantified scaling laws: a model’s prediction error falls along a predictable power-law curve as model size, dataset tokens, or compute budget grows, provided the other two are not the bottleneck. That gave labs a roadmap for bigger systems.
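
For readers who want the shape of such a curve, a power law in model size can be written as below. Here $L$ is the model's loss (its prediction error), $N$ is the number of parameters, and $N_c$ and $\alpha_N$ are constants fitted to experiments; the values are deliberately left out, and analogous expressions hold for dataset size and compute.

```latex
L(N) = \left( \frac{N_c}{N} \right)^{\alpha_N}
\qquad\Longrightarrow\qquad
\frac{L(2N)}{L(N)} = 2^{-\alpha_N}
```

The second expression is the practical takeaway: every doubling of model size multiplies the loss by the same fixed factor, which is what makes the payoff of a larger model predictable in advance.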

By 2023, openly released models such as Meta’s Llama family and the Hugging Face ecosystem lowered barriers for researchers and startups, pushing transformers into everything from legal analytics to indie game dialogue.

Inside Self-Attention

Imagine reading a sentence and, for each word, asking, “Whom should I pay attention to?” The transformer formalizes that intuition. Each word’s query vector is a search request; every word’s key vector, including its own, is a catalog entry. A dot product scores how relevant each catalog item is, the scores are normalized so they sum to one, and the matching value vectors get blended in those proportions, like gathering expert opinions weighted by relevance.
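
The recipe fits in a few lines of plain Python. The sketch below implements scaled dot-product attention as the 2017 paper defines it: dot products divided by the square root of the key length, passed through a softmax, then used to blend the values. One simplifying assumption is flagged loudly: a real transformer derives queries, keys, and values from each token through learned projection matrices, whereas this toy reuses the raw token vectors for all three. The token vectors themselves are invented.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this query against every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Blend the value vectors in proportion to the weights.
        blended = [sum(w * v[j] for w, v in zip(weights, values))
                   for j in range(len(values[0]))]
        outputs.append(blended)
        all_weights.append(weights)
    return outputs, all_weights

# Toy input: tokens 0 and 2 are identical, token 1 is different.
# Assumption: Q = K = V = the token vectors (no learned projections).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
outputs, weights = attention(tokens, tokens, tokens)
print(weights[0])  # token 0 weights tokens 0 and 2 equally, token 1 less
```

Note that the loop over queries is only for readability; no query depends on another's result, which is why real implementations compute all of them in one matrix multiplication.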

Multi-head attention repeats the procedure in parallel subspaces, so one head might track subject-verb agreement while another follows idioms. By stacking layers, the model refines fuzzy associations into sharper abstractions—the way early layers in a vision network detect edges before later layers see faces.

Because these dot-product comparisons are blind to word order, the transformer adds positional encodings (sinusoidal patterns in the original paper, learned vectors in many later models) to each token’s representation, giving it a sense of place. The result: global context without losing sequence.
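
The trigonometric variant from the 2017 paper is short enough to sketch directly: even-numbered slots of the encoding hold a sine, odd-numbered slots hold the matching cosine, and the wavelength grows from slot to slot. The function name and the tiny `d_model` used here are choices made for this example; the toy "embedding" is made up.

```python
import math

def positional_encoding(position, d_model):
    """Sinusoidal encoding for one position, following the 2017 paper's formula."""
    encoding = []
    for i in range(0, d_model, 2):
        # i is the even slot index, so the exponent i / d_model equals 2k / d_model.
        angle = position / (10000 ** (i / d_model))
        encoding.append(math.sin(angle))
        if i + 1 < d_model:
            encoding.append(math.cos(angle))
    return encoding

# The same (made-up) word vector at two positions becomes two different inputs.
embedding = [0.5, 0.5, 0.5, 0.5]
at_pos_1 = [e + p for e, p in zip(embedding, positional_encoding(1, 4))]
at_pos_2 = [e + p for e, p in zip(embedding, positional_encoding(2, 4))]
print(at_pos_1 != at_pos_2)  # True
```

Because the position signal is added to the token's vector before attention runs, identical words at different positions no longer look identical to the model.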

For the layperson, a helpful metaphor is a committee meeting: every attendee hears every other attendee at once, weighs what they say according to role, and produces a new opinion. Older RNNs resembled a game of telephone—messages passed sequentially, degrading with each handoff.

Why Transformers Beat the Old Guard

First, parallelism. Self-attention lets hardware process all the tokens in a sequence side by side, so training makes full use of GPUs built for large matrix multiplications. RNNs, chained by their hidden state, must finish one step before starting the next.

Second, global context. At each layer, every word sees every other word, enabling the model to connect distant clauses—a necessity for legal briefs, research papers, or novel chapters where key information may be pages apart.

Third, architectural flexibility. The same attention blocks now power vision transformers, speech-to-text systems, protein-folding predictors, and even reinforcement-learning policies. A uniform recipe means advances in one domain often transfer with minor tweaks. Amatriain and colleagues published an introduction to the family and a catalog of its variants on arXiv in 2023.

Finally, scale friendliness. Because the cost of adding parameters is balanced by predictable gains, labs can justify gargantuan budgets: spend more compute, harvest more capability. The open question is when diminishing returns will win the race.

Impact in the Real World

Search engines now use transformer-based re-rankers to gauge not just keyword overlap but intent; Google said in 2019 that adding BERT helped parse conversational queries.

Compact transformer variants enable on-device translation and live speech captioning, while larger generative models fine-tuned on code or scientific literature assist professionals with drafting and discovery.

GitHub’s Copilot, historically powered by an OpenAI Codex transformer, now suggests whole functions to millions of developers—a shift that MIT Technology Review likened to predictive text for software.

Open Questions and Active Research

Compute and energy cost now loom large. Outside estimates put the electricity needed to train GPT-4-class models on the order of gigawatt-hours, though developers rarely publish exact figures, and climate-conscious labs explore sparse attention or quantization to shrink the footprint.

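Context windows, though far longer than what RNNs could use in practice, still cap out, often at tens or hundreds of thousands of tokens, because standard self-attention compares every pair of tokens and its cost grows with the square of the sequence length. New prototypes propose linear-time or memory-efficient attention kernels to stretch those horizons.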
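
A little arithmetic shows why length is expensive. In standard self-attention every token is scored against every token, so the number of scores is the sequence length squared. The helper below (its name is an assumption of this sketch) counts scores for a single attention head in a single layer; real models multiply that by many heads and layers.

```python
def attention_score_count(num_tokens):
    """Number of query-key comparisons in one full self-attention head."""
    return num_tokens * num_tokens

for n in (1_000, 10_000, 100_000):
    print(f"{n:>7,} tokens -> {attention_score_count(n):,} scores")
```

Ten times the tokens means a hundred times the comparisons, which is the growth that linear-time attention variants try to avoid.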

Hallucinations, meaning confident but false outputs, stem from models optimizing for plausibility, not truth. Retrieval-augmented generation, where the system first fetches relevant passages from an external database and hands them to the transformer alongside the question, aims to ground answers in verified text.
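
The retrieval idea can be sketched without any model at all. In the toy below, "retrieval" is plain word overlap, a stand-in for the embedding-based search real systems typically use, and the final step simply assembles the prompt a language model would receive. All function names are hypothetical, and the two "documents" restate facts from this article.

```python
def words(text):
    """Lowercase a text and split it into a set of words, dropping basic punctuation."""
    return {w.strip(".,?!") for w in text.lower().split()}

def retrieve(question, documents, top_k=1):
    """Rank documents by shared words with the question (a toy stand-in for a real retriever)."""
    q = words(question)
    ranked = sorted(documents, key=lambda d: len(q & words(d)), reverse=True)
    return ranked[:top_k]

def build_prompt(question, documents):
    """Put the retrieved text in front of the question before it reaches the model."""
    context = "\n".join(retrieve(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

documents = [
    "The original transformer paper was published in 2017.",
    "BERT was introduced in 2018.",
]
prompt = build_prompt("When was BERT introduced?", documents)
print(prompt)
```

The model still writes the answer, but it now does so with the relevant passage in view rather than relying on whatever it memorized during training.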

Mixture-of-experts designs route each input through a few specialized subnetworks instead of the whole model, promising more accurate answers at lower cost, while multimodal designs extend the same architecture to images and audio. Regulators in several jurisdictions have signaled interest in audit requirements to ensure such systems respect privacy and copyright.
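
Expert routing comes down to a scoring step followed by a selection step. In the sketch below, the "experts" are trivial stand-in functions and the gate scores are supplied by hand; in a real mixture-of-experts layer the experts are neural subnetworks and a small learned router produces the scores for each token. Everything named here is hypothetical.

```python
import math

def softmax(scores):
    """Turn raw gate scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route(gate_scores, experts, x, top_k=1):
    """Send x to the top_k highest-scoring experts and mix their outputs."""
    probs = softmax(gate_scores)
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)  # renormalize over the chosen experts only
    output = sum(probs[i] / norm * experts[i](x) for i in chosen)
    return output, chosen

# Stand-in "experts"; the gate scores are hand-picked for this illustration.
experts = [lambda x: 2 * x, lambda x: x + 10, lambda x: -x]
output, chosen = route([0.1, 2.0, 0.3], experts, x=3.0)
print(output, chosen)  # 13.0 [1]
```

Only the chosen experts do any work, which is where the savings come from: the model can hold many experts' worth of parameters while paying for just a few per input.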

What Comes Next

Researchers foresee far longer context windows that can swallow entire textbooks, plus fine-grained control knobs for style or source attribution. Modular routing may let a math expert sub-model solve equations while a legal sub-model handles compliance, all under the transformer umbrella.

Policy debates will shape the landscape as much as math. Proposals for usage disclosures, bias audits, and energy caps could push innovation toward efficiency rather than brute-force scale.

Yet the central insight—tokens attending to every other token—seems poised to persist. Whether future systems graft neural chips onto memory pools or weave symbolic reasoning into the loop, self-attention has proven a versatile scaffold.

Transformers turned language modeling from a narrow relay race into a stadium-wide conversation where every participant hears everyone else. The ripple effects—instant translation, conversational AI, protein mapping—are still spreading. However the next act unfolds, it will likely build on the blueprint sketched in 2017.

That makes the transformer not just another algorithm but a linguistic Rosetta Stone—one that let machines read between the lines, and in doing so, rewrote the rules of human-computer interaction.

Sources

- Mikolov, T. et al. "Efficient Estimation of Word Representations in Vector Space." 16 Jan 2013.

- Bahdanau, D. et al. "Neural Machine Translation by Jointly Learning to Align and Translate." 1 Sep 2014.

- Vaswani, A. et al. "Attention Is All You Need." 12 Jun 2017.

- Devlin, J. et al. "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding." 11 Oct 2018.

- Brown, T. B. et al. "Language Models Are Few-Shot Learners." 28 May 2020.

- Kaplan, J. et al. "Scaling Laws for Neural Language Models." 21 Jan 2020.

- Amatriain, X. et al. "Transformer Models: An Introduction and Catalog." 12 Feb 2023.

- Xiao, T.; Zhu, J. "Introduction to Transformers: an NLP Perspective." 29 Nov 2023.