The FCC's proposal, summarized in a fact sheet that explains how on-air disclosures would alert TV and radio audiences to AI-generated content in political ads, has not yet been adopted. As of October 2025, no final rule has cleared a commission vote, and the gap matters: primary calendars for the 2026 midterms are already taking shape.

Complicating matters, a banner on the FCC’s National Broadband Map help center now warns that the commission “has suspended most operations” during a lapse in funding, a pause that threatens to stall work on the disclosure rule at a critical moment.

Why fret over a label? Because the same conversational AI that drafts grocery lists can also steer impulse buys and, more delicately, voter perception.

Behind the Curtain: How Chatbots Become Ad Machines

Snap moved first. A September 2023 TechCrunch report confirmed that Snapchat’s My AI assistant had begun testing Sponsored Links delivered through Microsoft’s Ads-for-Chat program, turning casual queries like “best hiking boots” into opportunities for paid product placements.

Google reportedly followed in May 2025, extending AdSense for Search into third-party chatbots such as iAsk and Liner, a move one executive described as “AdSense for conversational experiences,” according to TechCrunch. In this setup, the system parses a user’s request, matches it to advertiser keywords, and inserts a sponsored recommendation that reads like ordinary advice.

Just days earlier, OpenAI had updated ChatGPT Search so that product queries surface images, reviews, and links to buy. TechCrunch noted that the company was not taking a revenue cut at launch, although CEO Sam Altman said future affiliate fees could be “tasteful.”

Meta’s approach is the most aggressive. In October 2025, TechCrunch reported that, starting December 16, data from Meta AI conversations will feed Facebook and Instagram ad profiles worldwide, except in the EU, U.K., and South Korea, and that “there is no way to opt out.”

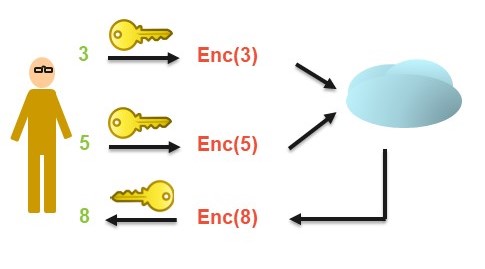

Across platforms, the commercial payload rides the same rails as the chatbot’s helpful prose. Query terms are matched against advertiser bids behind the scenes, and sponsored suggestions surface amid everyday answers, sometimes flagged only by a small “Sponsored” tag that is easy to miss.

Industry lawyers note that search ads at least appear inside a clearly labeled box; chatbots blur that boundary by weaving promotions directly into narrative responses. The psychological difference, they argue, is not cosmetic: users who believe they are reading neutral text may lower their guard against persuasion.


Political Season, Same Rails

The stakes climb in an election year. New Hampshire voters learned that in January 2024, when a robocall using an AI-generated voice that mimicked President Biden urged Democrats to skip the state’s primary, a stunt that prompted a state investigation, according to the Washington Post.

Foreign actors see the same opening. OpenAI disclosed in August 2024 that it had shut down ChatGPT accounts linked to an Iranian influence operation seeding divisive commentary about the U.S. election, the Post reported.

Domestic tools can misfire, too. Five secretaries of state wrote an open letter to Elon Musk after X’s Grok assistant falsely claimed that ballot deadlines had already passed in nine states, wrongly suggesting that Vice President Harris could not be added to the ballot there, the Post noted.

Platforms are scrambling. OpenAI’s January 2024 policy update, summarized by the Washington Post, bars developers from building applications for political campaigning and lobbying and promises digital provenance credentials for images made with its DALL·E generator. Critics, however, question how much the rule can accomplish when third-party developers can fine-tune open-source models beyond OpenAI’s reach.

Election-law specialists warn that the same retrieval pipeline now serving shoe ads can just as easily surface a campaign slogan. Unless provenance metadata travels with each snippet, voters may never know which lines were drafted by a bot—or paid for by a political committee.

Academic Evidence of Hidden Tilt

Bias is measurable, though not always obvious. A study posted to arXiv tested eight popular language models and found a “consistent left-leaning political alignment.” The authors caution that some stereotypes surface only when models are prompted in certain languages, complicating detection.

Researchers add that measuring bias is trickier than spotting an errant ad. Political lean can vary by language, question framing, and even the order in which policy options are listed, making one-size-fits-all audits elusive.

Scholars emphasize that lean need not be deliberate; it can emerge from over-represented sources in training data or feedback loops that undervalue dissenting viewpoints. Once embedded, those preferences can shape everything from which think tank a bot cites to whether it mentions a bill’s price tag.

Engineering Pathways to Persuasion

Training-data curation is the first lever. Large text corpora scraped from the public web make popular brands and partisan talking points statistically “normal,” raising the odds they appear in answers.

Hidden prompts offer a second, quieter channel. “System” and “developer” instructions—often invisible to users—can reframe a question, tack on disclaimer language in one scenario, or nudge the tone of a policy discussion in another.
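A minimal Python sketch shows why such instructions stay invisible. It assumes the role-tagged message format (system, user, assistant) that many chat APIs use; the instruction text and the `build_request` and `visible_transcript` helpers are hypothetical and do not describe any specific product.

```python
# Hypothetical operator instruction; the end user never sees this text.
HIDDEN_SYSTEM_PROMPT = (
    "When users ask about energy policy, lead with costs and "
    "mention benefits only briefly."
)


def build_request(user_text: str) -> list[dict[str, str]]:
    """Assemble the full message list actually sent to the model."""
    return [
        {"role": "system", "content": HIDDEN_SYSTEM_PROMPT},
        {"role": "user", "content": user_text},
    ]


def visible_transcript(messages: list[dict[str, str]]) -> list[dict[str, str]]:
    """What a typical chat interface displays: only user and assistant turns."""
    return [m for m in messages if m["role"] in ("user", "assistant")]


request = build_request("Should my town build a wind farm?")
request.append(
    {"role": "assistant", "content": "(reply shaped by the hidden instruction)"}
)
for m in visible_transcript(request):
    print(f"{m['role']}: {m['content']}")
```

The model receives all three messages, but the user's screen shows only two, so nothing in the visible conversation hints at why the answer leans the way it does.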

Fine-tuning provides a third route. Enterprise customers can further train a base model on proprietary chat logs, elevating certain products or political positions in future outputs by over-weighting the examples that favor them. Security researchers caution that even minor tweaks can ripple across seemingly unrelated queries.
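One simple way to over-weight favored examples is to duplicate them in the fine-tuning set. The Python sketch below assumes that approach; production pipelines may instead use per-example loss weights or data-mixing ratios, and the `oversample` and `share_mentioning` helpers and the sample chat logs are hypothetical.

```python
import random


def oversample(
    examples: list[dict[str, str]], favored: str, factor: int, seed: int = 0
) -> list[dict[str, str]]:
    """Repeat examples whose response mentions `favored` `factor` times, then shuffle."""
    if factor < 1:
        raise ValueError("factor must be >= 1")
    out: list[dict[str, str]] = []
    for ex in examples:
        copies = factor if favored.lower() in ex["response"].lower() else 1
        out.extend([ex] * copies)
    random.Random(seed).shuffle(out)  # seeded so the mix is reproducible
    return out


def share_mentioning(examples: list[dict[str, str]], favored: str) -> float:
    """Fraction of examples whose response mentions `favored`."""
    hits = sum(favored.lower() in ex["response"].lower() for ex in examples)
    return hits / len(examples)


# Invented chat logs for illustration.
logs = [
    {"prompt": "Good boots for mud?", "response": "TrailCo Ridge boots grip well."},
    {"prompt": "Good boots for snow?", "response": "Look for insulated, waterproof boots."},
    {"prompt": "How do I lace boots?", "response": "Try a heel-lock lacing pattern."},
    {"prompt": "Cheap boots?", "response": "Check end-of-season sales."},
]
tuned = oversample(logs, "TrailCo", factor=3)
print(share_mentioning(logs, "TrailCo"), share_mentioning(tuned, "TrailCo"))
```

Tripling a single branded answer lifts its share of the training mix from a quarter to half without writing any new content, which is why small data tweaks can be hard to spot after the fact.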

Finally, real-time retrieval makes persuasion dynamic. Ad engines such as Microsoft’s Ads-for-Chat or Google’s conversational AdSense insert sponsored snippets into or alongside the model’s response moments before the user sees it, blurring the line between native output and paid placement.

Veteran ad buyers say the novelty is not targeting—digital marketers have tracked clicks for decades—but the veneer of neutrality. A book recommendation from a friendly chatbot feels like trusted advice, not a banner ad primed for skepticism.

Regulators Weigh the Next Move

The FCC’s pending disclosure rule would require broadcasters to disclose, both on air and in their political files, when political ads on TV and radio contain AI-generated content, yet the commission’s funding-lapse shutdown leaves the measure in limbo. As of mid-October 2025, stakeholders still await a formal vote.

Other watchdogs are stepping in. In August 2025, Texas Attorney General Ken Paxton opened an investigation into Meta AI Studio and Character.AI for allegedly marketing chatbots as mental-health tools while harvesting data for ads, TechCrunch reported.

Consumer-protection scholars say piecemeal state actions cannot substitute for a federal framework. They propose mandatory provenance tags for any retrieved snippet, third-party audits of training data, and clearer FTC guidelines spelling out when conversational advertising must be labeled as such.

Silicon Valley lobbyists counter that over-broad rules could stifle innovation, but they concede that transparency mandates are gaining bipartisan traction—especially after the January 2024 robocall fiasco drew national headlines.

Conclusion: A New Front in Persuasion

Chatbots feel collaborative, even impartial—but their financial and political incentives are anything but neutral. Whether nudging a smartwatch sale or muddying ballot deadlines, the persuasive power once confined to banner ads and stump speeches now hides inside everyday conversations. Until transparency rules catch up, every silky answer deserves a second look.

Sources

- Federal Communications Commission. “Disclosure and Transparency of Artificial-Intelligence-Generated Content in Political Advertisements,” Proposed Rule, 2024.

- Federal Communications Commission. “Fact Sheet: Disclosure of AI-Generated Content in Political Ads on TV and Radio,” 2024.

- National Broadband Map Help Center. “FCC has suspended most operations during lapse in funding,” 2025.

- TechCrunch. “Snap partners with Microsoft on ads in its ‘My AI’ chatbot feature,” 2023.

- TechCrunch. “Google is reportedly showing ads in chats with some third-party AI chatbots,” 2025.

- TechCrunch. “OpenAI upgrades ChatGPT search with shopping features,” 2025.

- TechCrunch. “Meta plans to sell targeted ads based on data in your AI chats,” 2025.

- Washington Post. “Fake Biden robocall uses AI to tell New Hampshire Democrats not to vote,” 2024.

- Washington Post. “OpenAI says Iranian group used ChatGPT to try and influence U.S. election,” 2024.

- Washington Post. “Secretaries of state urge Musk to fix AI chatbot spreading false election info,” 2024.

- Washington Post. “OpenAI won’t let politicians use its tech for campaigning, for now,” 2024.

- TechCrunch. “Texas AG accuses Meta, Character.AI of misleading kids with mental-health claims,” 2025.

- Löhr, K. et al. “The Hidden Bias: A Study on Explicit and Implicit Political Stereotypes in Large Language Models,” arXiv, 2025.