Industry and federal partners include Intel, Broadcom, the Federal Highway Administration, and the National Institute of Standards and Technology. The 10 projects develop practical privacy-preserving data sharing and analytics solutions across sectors such as healthcare, transportation, agriculture, and cybersecurity. They use techniques like federated learning, secure multiparty computation, differential privacy, and trusted execution environments.

The program emphasizes real-world deployment, industry collaboration, and community testbeds. Its goal is to move privacy-enhancing technologies from theory into tools that public agencies and companies can deploy.

Key Points

- NSF TIP announced $10.4 million over three years for 10 PDaSP privacy-tech projects

- Awards back practical privacy-preserving data sharing tools, testbeds, and benchmarks

- Techniques include differential privacy, federated learning, secure multiparty computation, and trusted hardware

- Target sectors span healthcare, transportation, agriculture, cybersecurity, and genomics

- Partners include NSF CISE, Intel, Broadcom, FHWA, and NIST

- Example awards build transportation, genomics, and federated-learning platforms for real-world use

Policy Context and Program Structure

The Privacy-Preserving Data Sharing in Practice program was first outlined in a 2024 funding solicitation archived on the NSF website. That document frames PDaSP as supporting the advancement of privacy-enhancing technologies and their use in solving real-world problems. This falls within NSF TIP's broader portfolio of use-inspired and translational research aimed at U.S. competitiveness.

The solicitation links PDaSP directly to the 2023 Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence. This order directs NSF to prioritize research that encourages adoption of privacy-enhancing technologies and to develop testing environments, including testbeds for both safe AI technologies and associated privacy tools.

It also cites the National Strategy to Advance Privacy Preserving Data Sharing and Analytics, describing PDaSP as addressing that strategy's priority to accelerate transition to practice. This is achieved by promoting applied research, building tool repositories, and developing measurement methods and benchmarks.

PDaSP is structured around three tracks reflecting different maturity stages. Track 1 advances key technologies for privacy-preserving solutions, with budgets of $500,000 to $1 million for up to two years. Track 2 funds integrated solutions for specific application settings, with budgets of $1 million to $1.5 million for up to three years. Track 3 supports tools and testbeds, with awards of $500,000 to $1.5 million for up to three years.

Program Partners

The December 2025 announcement identifies NSF TIP and NSF CISE as collaborating directorates. Supporting partners include Intel, Broadcom, the Federal Highway Administration, and NIST. The earlier solicitation names Intel and VMware as industry partners, alongside FHWA and NIST.

The structure welcomes new partners from public and private sectors, with proposals considered for co-funding based on matching interests. This signals PDaSP as a multi-party initiative rather than a purely academic one.

In an official statement, NSF TIP assistant director Erwin Gianchandani said that "NSF is prioritizing investments in critical technologies to ensure a reliable and practical AI future while at the same time positioning the U.S. as a global leader in AI." He added that outcomes are expected to help government agencies and private industry adopt privacy-enhancing technologies and use data for the public good.

Program materials consistently emphasize testbeds, measurement methods, and benchmarking as core elements needed to translate these technologies into routine practice.

Examples from the First Cohort: Transportation

One Track 2 project titled "A Holistic Privacy Preserving Collaborative Data Sharing System for Intelligent Transportation" is led by Iowa State University researcher Meisam Mohammady. The award develops a comprehensive platform for sharing diverse intelligent transportation systems data.

That data includes naturalistic driving data from in-vehicle sensors, traffic conditions, road conditions, and video, and the platform is meant to share it while protecting individual privacy. The project adapts and scales privacy-preserving techniques for both centralized and distributed data sharing models.

It also develops a web-based recommendation system to help stakeholders select suitable privacy techniques for specific datasets, and creates audit and compliance tools based on formal privacy guarantees. Iowa State's funding is $232,000, with collaboration from the Department of Transportation, the University of Connecticut, the Illinois Institute of Technology, and the University of Washington.

Examples from the First Cohort: Machine Learning Infrastructure

A Track 3 award led by Harvard University, titled "Rigorous and Performant Differentially Private Machine Learning via OpenDP," focuses on integrating strong privacy protections into machine learning workflows. Many AI systems are trained on datasets containing sensitive information in domains such as government services, healthcare, and education and can inadvertently reveal that information.

The project develops freely available software tools that make state-of-the-art privacy protection methods practical and accessible. It implements new techniques for training large models with differential privacy while keeping computational overhead manageable.

This is achieved by integrating differentially private stochastic gradient descent (DP-SGD) into the OpenDP open-source library and connecting it with Opacus, a PyTorch library for differentially private training. The team will also optimize noise distributions and develop privacy accountants that track the privacy guarantee as training proceeds. The award is $800,000.
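To make the DP-SGD idea concrete, here is a minimal sketch of a single training step for a small linear model, written in plain Python. It is not the OpenDP or Opacus API and is not taken from the Harvard project; the function names, the least-squares loss, and the default parameters are all assumptions chosen for illustration. The two ingredients it shows are the standard ones: each example's gradient is clipped to a fixed norm, and Gaussian noise scaled to that norm is added before the update.

```python
import math
import random


def clip(grad, max_norm):
    """Scale one example's gradient so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_step(weights, batch, lr=0.1, max_norm=1.0,
                noise_multiplier=1.0, rng=random):
    """One illustrative DP-SGD step for least-squares linear regression.

    batch is a list of (features, target) pairs. All names and defaults
    here are hypothetical, not part of any library.
    """
    summed = [0.0] * len(weights)
    for x, y in batch:
        pred = sum(w * xi for w, xi in zip(weights, x))
        grad = [2.0 * (pred - y) * xi for xi in x]  # per-example gradient
        for i, g in enumerate(clip(grad, max_norm)):
            summed[i] += g
    # Gaussian noise with standard deviation tied to the clipping bound.
    sigma = noise_multiplier * max_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    # Average the noisy sum over the batch and take a gradient step.
    return [w - lr * n / len(batch) for w, n in zip(weights, noisy)]
```

Clipping bounds how much any single record can move the model, which is what lets the added noise translate into a formal privacy guarantee. Computing the resulting epsilon is the job of a privacy accountant and is not shown here.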

Examples from the First Cohort: Genomics

A Track 2 award titled "Confidential Genome Imputation and Analytics (CoGIA)" led by Yale University develops secure methods that let users analyze their own genetic data using controlled-access reference datasets without compromising privacy. Large-scale genetic datasets are important for understanding genetic variation and health impact, but access is often restricted due to privacy concerns.

Yale will develop deployment-ready algorithms and software for genome imputation and analytics services running in trusted execution environments. They will use data-oblivious techniques that keep observable system behavior uniform to reduce side-channel risks.
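As a rough illustration of what "data-oblivious" means, the sketch below looks up one entry in a table while reading every entry, so the sequence of memory accesses does not depend on which index was requested. This is a toy example under stated assumptions, not the CoGIA design: the function name is hypothetical, the table holds integers, and Python itself makes no constant-time guarantees, so real enclave code would typically be written in a lower-level language.

```python
def oblivious_lookup(table, secret_index):
    """Return table[secret_index] while touching every slot exactly once.

    Illustrative only: the access pattern is the same for any index,
    but Python gives no timing guarantees.
    """
    result = 0
    for i in range(len(table)):
        value = table[i]                   # every slot is read
        is_match = int(i == secret_index)  # 1 for the wanted slot, else 0
        result += is_match * value         # same arithmetic each iteration
    return result
```

The cost is a full scan per lookup. Practical oblivious algorithms aim to reduce that overhead while keeping the observable behavior independent of the secret input.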

The project includes designing a multi-layered security framework with data transformation, usage monitoring, and testing environments, and extending methods to a federated network of genomic repositories. Yale receives $1.2 million, with the University of Maryland as a sub-awardee.

Examples from the First Cohort: Federated Learning Testbed

A Track 3 project at the University of Massachusetts Amherst, "Testbed for Enhancing Privacy and Robustness of Federated Learning Systems," responds to the lack of standardized assessment tools for models trained across multiple data sources. The project's platform, FLTest, will automate privacy and robustness evaluations through test orchestration, privacy attack simulation, configuration vulnerability detection, and recommendation engines.

The team will validate FLTest across multiple domains and datasets, create standardized benchmarks, and develop detailed reporting for security analysis. The award is $366,669, with collaboration from three industry partners.

Technical Approaches

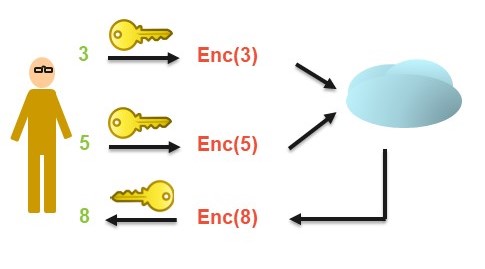

Differential privacy, which adds carefully calibrated statistical noise so results do not reveal individual inclusion in a dataset, is central to the Harvard project. By embedding differentially private stochastic gradient descent into open-source libraries like OpenDP and Opacus, the project makes formal privacy guarantees more accessible to practitioners.
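The "carefully calibrated noise" idea can be sketched with the classic Laplace mechanism applied to a counting query. This is a generic textbook illustration, not code from OpenDP or the Harvard project; the function names and parameters are assumptions. Adding or removing one record changes a count by at most 1, so Laplace noise with scale 1/epsilon is enough for an epsilon-differentially-private answer.

```python
import random


def laplace_noise(scale, rng=random):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng=random):
    """Answer 'how many records satisfy predicate?' with Laplace noise.

    A count has sensitivity 1, so the noise scale is 1 / epsilon.
    Smaller epsilon means more noise and stronger privacy.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    ages = [23, 35, 41, 58, 62, 19, 77]
    print(private_count(ages, lambda a: a >= 40, epsilon=0.5))
```

Naive floating-point sampling like this is known to have subtle weaknesses, which is one reason vetted libraries are preferred over hand-rolled noise in deployed systems.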

The Harvard approach also provides clear privacy accounting throughout training.

Trusted execution environments, hardware-supported secure areas that process sensitive data with reduced leakage risk, are central to the Yale genome analytics project. Data-oblivious algorithms maintain uniform observable behavior, while multi-layer security controls combine transformation and monitoring. The methods extend to federated networks of genomic repositories to enable cross-institutional analytics while keeping raw data within each repository.

The Iowa State transportation project integrates existing and new privacy-preserving techniques into intelligent transportation systems workflows through the PAIR framework. It provides a web-based recommendation system for appropriate techniques and builds audit and compliance tools that formalize privacy guarantees for stakeholders.

FLTest at UMass Amherst focuses on systematic evaluation through automated orchestration and attack simulation models to test federated learning systems. By providing benchmarks, configuration checks, and standardized reporting, the platform helps both novice and expert users understand how well their systems protect data across heterogeneous datasets.
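For readers unfamiliar with the kind of system a testbed like FLTest would evaluate, the sketch below shows one round of federated averaging on a toy linear model. It is not FLTest code; the function names, the model, and the learning rate are assumptions for illustration. Each client takes a gradient step on its own data, and only the resulting weights, never the raw records, are sent back and averaged in proportion to dataset size.

```python
def local_step(weights, data, lr=0.05):
    """One gradient step of least-squares regression on a client's own data."""
    grad = [0.0] * len(weights)
    for x, y in data:
        err = sum(w * xi for w, xi in zip(weights, x)) - y
        for i, xi in enumerate(x):
            grad[i] += 2.0 * err * xi / len(data)
    return [w - lr * g for w, g in zip(weights, grad)]


def federated_average(client_weights, client_sizes):
    """Average client models, weighting each by its number of examples."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


def run_round(global_weights, client_datasets):
    """One federated round: local training, then server-side averaging."""
    updates = [local_step(global_weights, d) for d in client_datasets]
    sizes = [len(d) for d in client_datasets]
    return federated_average(updates, sizes)
```

Even though raw data stays local, the shared weights can still leak information, which is why attack simulation and configuration checks of the sort described above matter.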

Implications and Long-Term Outlook

Both the solicitation and the December announcement stress that PDaSP aims at deployment rather than only conceptual advances. This emphasis shapes project structure, with many awards including software platforms, user studies, and security evaluation mechanisms.

By citing the 2023 AI executive order and the National Strategy, NSF positions PDaSP as one route to meeting federal directives on privacy and trustworthy AI. The Harvard and UMass Amherst awards target the infrastructure used to train and evaluate AI models in sensitive domains.

Meanwhile, the transportation and genomics projects show how privacy technologies are being tailored to specific sectoral needs. The PAIR framework addresses intelligent transportation systems relying on high-resolution mobility data, while CoGIA addresses confidentiality of large genomic reference datasets.

Together, these awards show PDaSP funding both cross-cutting tools and domain-focused platforms. Several awards run through late 2028, giving teams time to complete field testing, produce open or shared software, and document benchmarks.

How far these outputs shape routine practice in AI development and data governance will become clearer as projects progress and additional award details become public.

Sources

- U.S. National Science Foundation. "NSF invests over $10M to advance U.S. leadership in privacy-enhancing technologies and accelerate their use for real-world solutions." National Science Foundation, 2025.

- U.S. National Science Foundation. "Privacy-Preserving Data Sharing in Practice (PDaSP) solicitation, NSF 24-585." National Science Foundation, 2024.

- Iowa State University Department of Computer Science. "Meisam Mohammady Awarded NSF Grant." Iowa State University, 2025.

- National Science Foundation. "PDaSP Track 2: A Holistic Privacy Preserving Collaborative Data Sharing System for Intelligent Transportation." NSF Award Abstract, 2025.

- National Science Foundation. "PDaSP: Track 3: Rigorous and Performant Differentially Private Machine Learning via OpenDP." NSF Award Abstract, 2025.

- National Science Foundation. "PDaSP Track 2: Confidential Genome Imputation and Analytics (CoGIA)." NSF Award Abstract, 2025.