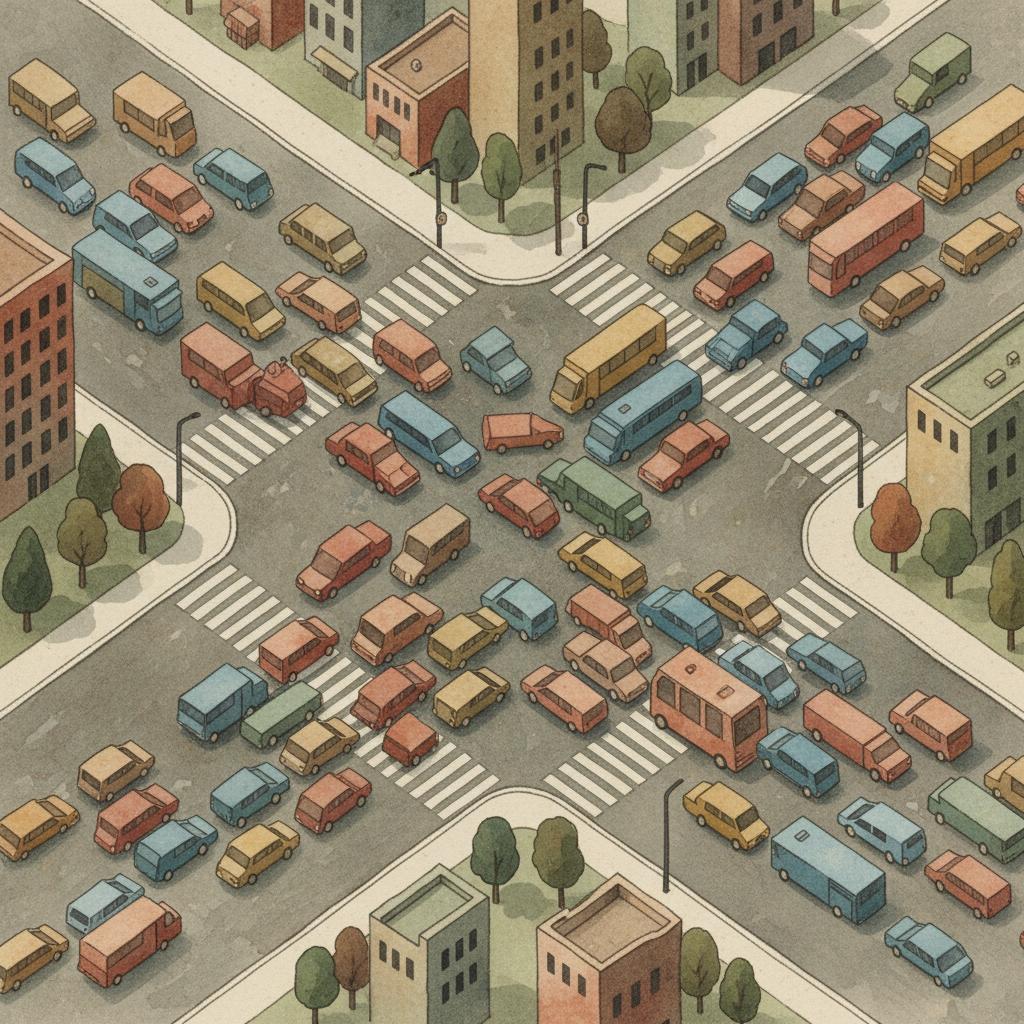

Within that total, bad bots, which impersonate users, probe application programming interfaces (APIs), or inflate metrics, represented 37 percent of all traffic. Imperva’s historical figures show a steady rise in automated activity over multiple years rather than a single spike.

The trend indicates that what began as occasional scraping has become continuous automated interaction with many public-facing services.

Researchers and platform operators have anticipated a numerical crossing point at which automated traffic would exceed human web traffic, but they weigh the practical consequences differently. Security teams see higher fraud and abuse risk, while marketers face analytics distorted by non-human visits.

Machine-learning practitioners must also consider how much of the text and image data they collect from the open web reflects human expression rather than scripted automation.

Key Findings on Internet Automation

- Bots generated 51 percent of web traffic in 2024, exceeding human activity.

- Bad bots accounted for 37 percent of total traffic in Imperva’s data.

- NewsGuard identified 2,089 largely undisclosed AI-run news and information sites.

- Research shows that training on AI-generated text can trigger model collapse.

- Human-curated, well-sourced content gains relative value as synthetic material spreads.

How Automated Traffic Became the Majority

Imperva attributes the rise in automated traffic to a combination of scalable crawlers, improved evasion techniques, and wider use of AI to generate and coordinate scripts that can mimic human behavior across multiple sites.

According to the same Imperva analysis, attackers now use AI to refine unsuccessful attempts and adjust bot patterns in response to defenses. As defensive systems improve at detecting simple scripts, more activity shifts into sophisticated bots that rotate identities, vary behavior, and adapt to new countermeasures.

High-value platforms in sectors such as retail and travel have seen a notable share of advanced bot activity aimed at APIs. There, automated requests can support account takeover, credential stuffing, or inventory manipulation.

Imperva reports that API-directed attacks account for a large fraction of advanced bot traffic, which creates operational pressure on organizations that expose many functions through programmable interfaces.

In response, organizations have invested in bot-detection tools, rate limits, and behavioral analysis, but these controls can also introduce friction for legitimate visitors. When bot detection relies on aggressive challenges or blocks entire network segments, users with strict privacy settings or atypical browsing patterns can encounter more barriers even as malicious automation grows more capable.
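Rate limiting, one of the controls mentioned above, can be sketched as a token bucket. This is a minimal illustration, not any vendor's implementation: the class name `TokenBucket`, its parameters, and the choice of the token-bucket algorithm itself are assumptions made for this example and do not come from Imperva’s report.

```python
class TokenBucket:
    """Hypothetical per-client rate limiter (illustrative only).

    Each client starts with `capacity` tokens. Every request costs one
    token, and tokens refill at `refill_per_second` up to `capacity`.
    """

    def __init__(self, capacity, refill_per_second):
        self.capacity = float(capacity)
        self.refill_per_second = float(refill_per_second)
        self.tokens = float(capacity)
        self.last_seen = None  # timestamp of the previous request

    def allow(self, now):
        """Return True if a request arriving at time `now` (seconds) may pass."""
        if self.last_seen is not None:
            elapsed = max(0.0, now - self.last_seen)
            refilled = self.tokens + elapsed * self.refill_per_second
            self.tokens = min(self.capacity, refilled)
        self.last_seen = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


if __name__ == "__main__":
    bucket = TokenBucket(capacity=3, refill_per_second=1.0)
    # Five requests in the same instant: the burst allowance covers three.
    print([bucket.allow(0.0) for _ in range(5)])
    # Two seconds later the bucket has refilled enough for another request.
    print(bucket.allow(2.0))
```

The same sketch shows where friction comes from: a small `capacity` throttles scripted bursts, but it also rejects a legitimate visitor who happens to issue several requests at once.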

The numeric majority of automated traffic therefore reflects both technical progress on the attacker side and uneven adoption of effective defenses. As long as attackers can exploit low-cost AI tools and many sites rely on heterogeneous protection strategies, analysts expect the proportion of automated requests to continue increasing, even if absolute human activity also grows.

Industrial-Scale AI News Sites

Traffic automation is mirrored by automation at the publisher level. As of October 2025, the AI Tracking Center run by NewsGuard listed 2,089 news and information sites, spanning 16 languages, that operate with little to no human oversight and rely primarily on generative models for story production.

NewsGuard reports that these domains often use generic names and designs that resemble established regional or topical outlets, making them appear to be conventional news sites. Behind that surface, articles are written largely or entirely by automated systems that generate headlines and copy on a wide range of subjects.

Most of the tracked sites do not prominently disclose automated authorship. About and contact pages frequently offer limited detail about ownership, editorial standards, or review processes.

This lack of transparency complicates evaluation for readers who first encounter the material through search snippets, recommendation feeds, or social media shares.

NewsGuard’s earlier reporting describes many of these outlets as content farms that publish large volumes of generic stories. The focus on quantity over distinct editorial voice, combined with low marginal costs enabled by AI tools, allows operators to expand into many niches and languages without proportionate investment in staff.

Programmatic advertising provides a revenue path for such sites because ads follow audience impressions rather than editorial reputation. When automated publishing can generate large numbers of pages with minimal direct oversight, even modest traffic per page can add up to a viable business if ad systems treat these domains similarly to human-edited outlets.

Model Collapse Risk in Synthetic Training Data

Automation that reshapes public text has implications for future model training. The 2023 paper "The Curse of Recursion: Training on Generated Data Makes Models Forget," released on arXiv, examines what happens when models are repeatedly trained on data that increasingly consists of their own outputs or the outputs of similar systems.

The authors show that when synthetic data produced by generative models replaces a growing share of original training data, the resulting models lose information about the tails of the original distribution. They refer to this degradation as model collapse and document it in several classes of generative models, including large language models, variational autoencoders, and Gaussian mixture models.
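The loss of tails can be illustrated with a toy simulation. The sketch below is not the paper's experimental setup: it assumes a hypothetical 50-token vocabulary with a Zipf-like starting distribution, and it treats each "generation" as nothing more than re-estimating token frequencies from a finite sample drawn from the previous generation. All function names and parameter values are invented for this example.

```python
import random
from collections import Counter


def resample_generation(probs, n_samples, rng):
    """Draw n_samples tokens from `probs`, then re-estimate the distribution
    from the empirical frequencies of that sample alone."""
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    sample = rng.choices(tokens, weights=weights, k=n_samples)
    counts = Counter(sample)
    # Tokens that were never sampled get no entry, so they cannot return.
    return {token: count / n_samples for token, count in counts.items()}


def simulate(n_tokens=50, n_samples=200, generations=30, seed=0):
    """Return the number of tokens with nonzero probability per generation."""
    rng = random.Random(seed)
    # Zipf-like start: a few common tokens and a long tail of rare ones.
    raw = {f"tok{i}": 1.0 / (i + 1) for i in range(n_tokens)}
    total = sum(raw.values())
    probs = {token: weight / total for token, weight in raw.items()}

    support_sizes = [len(probs)]
    for _ in range(generations):
        probs = resample_generation(probs, n_samples, rng)
        support_sizes.append(len(probs))
    return support_sizes


if __name__ == "__main__":
    print(simulate())
```

Because a token that draws zero samples in one generation has zero probability in every later one, the count of surviving tokens can only stay flat or fall. Rare tokens are the most likely to be missed, which is the tail-loss mechanism in miniature.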

The study warns that large-scale web scraping in an environment saturated with generated content can reproduce these dynamics if synthetic material is not identified and controlled. The authors argue that data drawn from genuine human interactions with systems becomes more valuable as synthetic outputs occupy a larger fraction of publicly available text, because such human data helps preserve rare patterns that would otherwise disappear.

Why Human-Curated Content Gains Market Value

As synthetic material grows more common, content with clear authorship, accessible citations, and transparent correction policies becomes easier to distinguish from generic automated pages. For readers, these attributes offer practical cues about reliability and make it possible to trace specific claims back to verifiable primary sources.

For organizations that train or fine-tune models, human-curated publications can serve as more dependable training data than unfiltered web crawls that mix human and machine-generated text. Drawing on such sources does not eliminate error, but it reduces exposure to the recursive training on synthetic outputs that "The Curse of Recursion" identifies as a driver of model collapse.
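One simple way to favor curated sources is to filter a crawl against an allow-list of vetted publishers. The sketch below shows that idea only; the host names, the `CURATED_HOSTS` set, the document layout (a dict with a `url` key), and both function names are hypothetical. A production pipeline would likely need richer provenance signals than a host name.

```python
from urllib.parse import urlparse

# Hypothetical allow-list of vetted publishers (placeholder host names).
CURATED_HOSTS = {"example-journal.org", "example-news.com"}


def is_curated(url, allowed_hosts=CURATED_HOSTS):
    """Return True if the URL's host is on the curated allow-list."""
    host = (urlparse(url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    return host in allowed_hosts


def filter_documents(documents, allowed_hosts=CURATED_HOSTS):
    """Keep only crawled documents whose source host is curated."""
    return [doc for doc in documents if is_curated(doc["url"], allowed_hosts)]


if __name__ == "__main__":
    crawl = [
        {"url": "https://www.example-news.com/story", "text": "..."},
        {"url": "https://unknown-farm.example/page", "text": "..."},
    ]
    print([doc["url"] for doc in filter_documents(crawl)])
```

An allow-list trades coverage for control: it discards most of the crawl, which is the acquisition-cost side of the trade-off, in exchange for knowing where every retained document came from.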

Economic signals reflect this differentiation. Outlets that invest in editing, fact-checking, and documented standards can command higher subscription prices, licensing fees, or advertising rates than anonymous content farms of similar size.

Automated publishing still benefits from scale advantages, but identifiable human input functions as a quality marker in an environment where many pages otherwise resemble one another.

For model builders, relying more heavily on curated material usually raises data acquisition and processing costs compared with scraping large volumes of open web text. That trade-off mirrors the reader’s choice between spending more time or money to seek out vetted sources and accepting the convenience of undifferentiated search results.

The numerical flip identified by Imperva marks a clear shift in what generates most routine web traffic. Combined with AI-written pages catalogued by NewsGuard and the degradation risks outlined in academic work, the rise of automated activity now shapes the operating environment for security, publishing, and machine-learning teams.

Whether defensive standards, disclosure practices, and training-data safeguards evolve alongside this trend will influence the reliability of information available online over the next decade. Outcomes will depend less on a single technical breakthrough than on sustained investment in provenance tracking, transparent labeling, and incentives that reward verifiable human judgment.

Sources

- Imperva. "2025 Bad Bot Report." Imperva, 2025.

- NewsGuard AI Tracking Center. "Tracking AI-Enabled Misinformation: Over 2000 Undisclosed AI-Generated News Websites." NewsGuard, 2025.

- Shumailov, Ilia et al. "The Curse of Recursion: Training on Generated Data Makes Models Forget." arXiv, 2023.